I had two VS projects, one dating back to January (whose renders are available in my previous post about SSGI) and the other modified in March.

I don't remember exactly which modifications I made, but the March version looks different from the first one, although it suffers from the same problems.

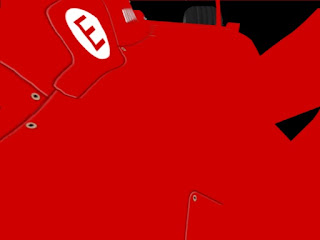

In my previous post I spent a few words on how impossible it was to fine-tune SSGI. Let's look again at one of the shots.

As you can see, there are many problems:

1- "E" gets blurred. Since I gather samples around a pixel and every surrounding pixel emits light, the resulting image looks "haloed".

2- There's fake lighting. By fake lighting I mean the shape gets too much light. I'd like SSGI not to start a lighting war against a standard lighting model. SSGI should add a modest contribution; it's not supposed to create fake lights.

3- You can clearly see a halo the size of my filter kernel. This is awful and gets worse when you move the camera. It's due to the SSAO-ish nature of the algorithm, but there are some tricks to reduce this effect.

4- This one is impossible to see, as it's related to the way I'm combining the diffuse and SSGI buffers. Since the contribution is too heavy, I've been forced to scale the SSGI buffer AND blend it with the diffuse buffer. I'd like to be able to simply add the SSGI buffer.
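To make point 4 concrete, here is a minimal sketch in Python (not the actual shader code) of the two ways of combining the buffers for a single RGB pixel. The function names, the `scale` and `blend` parameters, and the use of a linear interpolation for the blend are all assumptions made for illustration; the post doesn't spell out the exact formula.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def clamp01(x):
    """Clamp a channel value to the displayable [0, 1] range."""
    return max(0.0, min(1.0, x))


def scale_and_blend(diffuse, ssgi, scale, blend):
    """Hypothetical 'scale AND blend' combine: the SSGI value is first
    scaled down, then mixed with the diffuse value. Two knobs to tune."""
    return tuple(
        clamp01(lerp(d, s * scale, blend)) for d, s in zip(diffuse, ssgi)
    )


def additive(diffuse, ssgi):
    """The simpler combine: SSGI is just added on top of diffuse.
    This only looks right if the SSGI contribution is already modest."""
    return tuple(clamp01(d + s) for d, s in zip(diffuse, ssgi))


# One grey pixel receiving a subtle reddish bounce.
pixel = additive((0.5, 0.5, 0.5), (0.25, 0.0, 0.0))
```

With the blend, part of the diffuse term is replaced rather than added to, which is one reason this kind of combine is harder to reason about than a plain sum.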

Since the algorithm suffers from the aforementioned problems, the results are:

1- it's impossible to clearly see the fine details of a texture. That blurred look could be ok for a dream-like scene, but it's not going to help you render realistic scenes.

2- coherence with local lighting is lost. Lights supposed to gently illuminate geometry produce overly bright areas. It's going to be a nightmare to tune.

3- the effect is quite awful when moving the camera. Do I need to say more?

4- the artist isn't able to control the overall look of the scene.

I took the modded version and looked for possible solutions. After some work and tests, I came up with a version I think is better than the previous one. It still suffers from some problems like haloing, but I have some ideas to improve it further.

My goal was to create a "gentle" SSGI shader adding subtle details to the scene.

No SSGI

SSGI

It's hardly noticeable, but things get better when adding simple dot(n,l) lighting to the reference image. Here's a closer shot.
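For reference, the dot(n,l) term mentioned above is the standard Lambert diffuse factor. The sketch below shows it in Python for a single pixel, with the SSGI value simply added on top. The `shade` helper and its parameters are hypothetical and only illustrate the idea; they are not taken from the shader used for these shots, and the vectors are assumed to be non-zero.

```python
import math


def normalize(v):
    """Return v scaled to unit length (v is assumed to be non-zero)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    """Dot product of two 3-component vectors."""
    return sum(x * y for x, y in zip(a, b))


def lambert(normal, light_dir):
    """Lambert term: max(dot(n, l), 0), where light_dir points from the
    surface towards the light. Surfaces facing away receive nothing."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(dot(n, l), 0.0)


def shade(albedo, normal, light_dir, ssgi):
    """Hypothetical final colour: direct diffuse lighting plus the SSGI
    contribution, clamped to 1.0 per channel."""
    n_dot_l = lambert(normal, light_dir)
    return tuple(min(a * n_dot_l + s, 1.0) for a, s in zip(albedo, ssgi))
```

A surface facing the light head-on gets the full albedo plus the indirect term, while a surface facing away is lit by the SSGI term alone, which is where a subtle contribution is easiest to spot.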

No SSGI

SSGI

The "cool" look of the old shots, à la photon mapping, is still there, but it's mostly noticeable when looking at small, flat details:

No SSGI

SSGI

I also ran a simple test on "Sponza Atrium". SSGI haloing is still there and it's a bit too bright but, as I said, now it's easily tweakable.

No SSGI

SSGI

I'm planning to improve the algorithm. In particular, I'd like to remove the halos and integrate it into a full-featured render system.